My university is (by necessity) very strict about all of our websites and affiliated websites meeting accessibility standards for readers with disabilities: the W3C Web Content Accessibility Guidelines (WCAG) 2.0 Level AA and the Web Accessibility Initiative Accessible Rich Internet Applications Suite (WAI-ARIA) 1.0 techniques for web content. If we don’t meet certain deadlines, we will be required to disable the site. I checked our Curatescape site (renohistorical.org) with the Siteimprove Chrome plug-in and found it to be seriously deficient. The most common problem seems to be images without alt tags, including the location bubbles on the map, but also areas of the map itself that are coded as images. There are other issues, too, such as “Missing button in form,” “Form elements are not grouped,” and “WAI-ARIA roles” not matching “the functionality of the elements.” Is anyone else facing this situation? Meeting the standards seems like a rather daunting process, and I’m not sure we can make these fixes ourselves (if we can, please send instructions)!

Hi @dcurtis,

Validators aren’t always capable of understanding complex websites. Some validators may flag a lot of issues, but it’s important to understand that they’re evaluating from a very limited perspective. For example, the map is made up of tiled images that do not need alt tags (and alt tags couldn’t be added to them in any case). Likewise, alt tags for the images in the map bubbles aren’t especially important, since those images are strictly decorative in that context: the title of the location already provides an equivalent description. A screen reader user is actually better served by not hearing an image description there, because it would only repeat that information. There are many other parts of the site that have been given significant consideration regarding accessibility but will never validate.
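In HTML, the usual way to mark an image as decorative is an empty alt attribute (`alt=""`), which screen readers skip; an `<img>` with no alt attribute at all is what most checkers flag. If you want to see which images on a saved page fall into which group before going through a validator report, here is a minimal sketch using only Python's standard-library `html.parser`. The class and function names (`ImgAltAudit`, `audit_images`) are hypothetical, it assumes you have the page HTML locally, and it does not reproduce Siteimprove's or any other validator's rules.

```python
from html.parser import HTMLParser


class ImgAltAudit(HTMLParser):
    """Hypothetical helper: sorts <img> tags by how their alt attribute is set."""

    def __init__(self):
        super().__init__()
        self.missing = []     # no alt attribute: what checkers usually flag
        self.decorative = []  # alt="" (or a bare alt): screen readers skip these
        self.described = []   # non-empty alt text: read aloud to the user

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; self-closing <img ... />
        # also reaches this method via the default handle_startendtag.
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src") or ""
        if "alt" not in attr_map:
            self.missing.append(src)
        elif not (attr_map["alt"] or "").strip():
            self.decorative.append(src)
        else:
            self.described.append(src)


def audit_images(html: str) -> ImgAltAudit:
    """Feed a page's HTML through the parser and return the filled buckets."""
    parser = ImgAltAudit()
    parser.feed(html)
    parser.close()
    return parser


if __name__ == "__main__":
    # Illustrative markup only, not taken from an actual Curatescape page.
    sample = """
    <div class="map-bubble">
      <img src="thumb.jpg" alt="">
      <h3>Example Location</h3>
    </div>
    <img src="logo.png">
    <img src="photo.jpg" alt="Historic storefront photograph">
    """
    result = audit_images(sample)
    print("missing:", result.missing)        # missing: ['logo.png']
    print("decorative:", result.decorative)  # decorative: ['thumb.jpg']
    print("described:", result.described)    # described: ['photo.jpg']
```

Only the "missing" bucket represents images a screen reader cannot classify; images in the "decorative" bucket are intentionally silent, which is the behavior described above for the map bubbles.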

Some of the other issues you see are likely warnings about things outside our control. For example, “invalid links” are reported for elements linked to URLs with Omeka-specific query parameters; these present no real barrier to usability or any other functionality, they simply don’t validate because the validator doesn’t recognize them. Other items on the report may be SEO recommendations, which are not part of any standard (though Curatescape projects perform very well in real-world SEO).

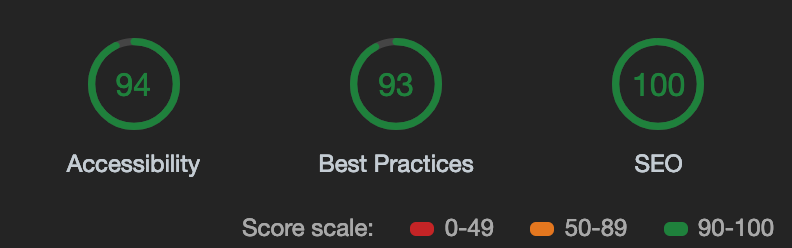

We always test with Chrome’s Lighthouse tools, which currently give us scores of 100/100 for SEO, 94/100 for accessibility, and 93/100 for best practices. (Reno Historical scores 80 on best practices because it doesn’t use HTTPS, which is beyond our control since your project is self-hosted.) Lighthouse is not necessarily a comprehensive testing suite, but it is arguably the most practical and realistic one for understanding modern websites.
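If you want to check these numbers for your own install, the Lighthouse CLI (a separate npm package) can write a JSON report, for example `lighthouse https://renohistorical.org --only-categories=accessibility --output=json --output-path=report.json`. The sketch below assumes that report's layout, where each entry under `categories` and `audits` carries a `score` from 0 to 1 (or null for informational and manual-check audits), and pulls out the category scores plus any audits that did not fully pass. The function names are hypothetical, and the field layout is worth double-checking against the Lighthouse version you run.

```python
import json


def load_report(path: str) -> dict:
    """Read a Lighthouse report saved with --output=json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_lighthouse(report: dict) -> dict:
    """Hypothetical helper: category scores on a 0-100 scale plus failing audits.

    Assumes categories[<id>]["score"] and audits[<id>]["score"] are numbers
    from 0 to 1, or None when an audit is informational or needs a manual check.
    """
    scores = {}
    for cat_id, category in report.get("categories", {}).items():
        score = category.get("score")
        scores[cat_id] = None if score is None else round(score * 100)

    # Audits with a score below 1 are the ones worth reviewing by hand.
    failing = sorted(
        audit_id
        for audit_id, audit in report.get("audits", {}).items()
        if audit.get("score") is not None and audit["score"] < 1
    )
    return {"scores": scores, "failing": failing}


if __name__ == "__main__":
    # Illustrative numbers only, not a real report.
    sample = {
        "categories": {"accessibility": {"score": 0.94}, "seo": {"score": 1.0}},
        "audits": {"image-alt": {"score": 0}, "document-title": {"score": 1}},
    }
    summary = summarize_lighthouse(sample)
    for cat_id, score in summary["scores"].items():
        print(f"{cat_id}: {score}")  # accessibility: 94, then seo: 100
    print("audits to review:", ", ".join(summary["failing"]) or "none")
    # audits to review: image-alt
```

With a real report on disk, the equivalent call would be `summarize_lighthouse(load_report("report.json"))`.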

All that said, I’m happy to review any report you send (digitalhumanities@csuohio.edu). There is always room for improvement, and I know for sure there are a few relatively small issues that do need attention. Again, send me your report and I’ll have a closer look.

Thanks – Erin